GitHub - Laurae2/amd-ds: Data Science: AMD/OpenCL GPU Deep Learning: Setup Python + Caffe/XGBoost + 1.7x RAM

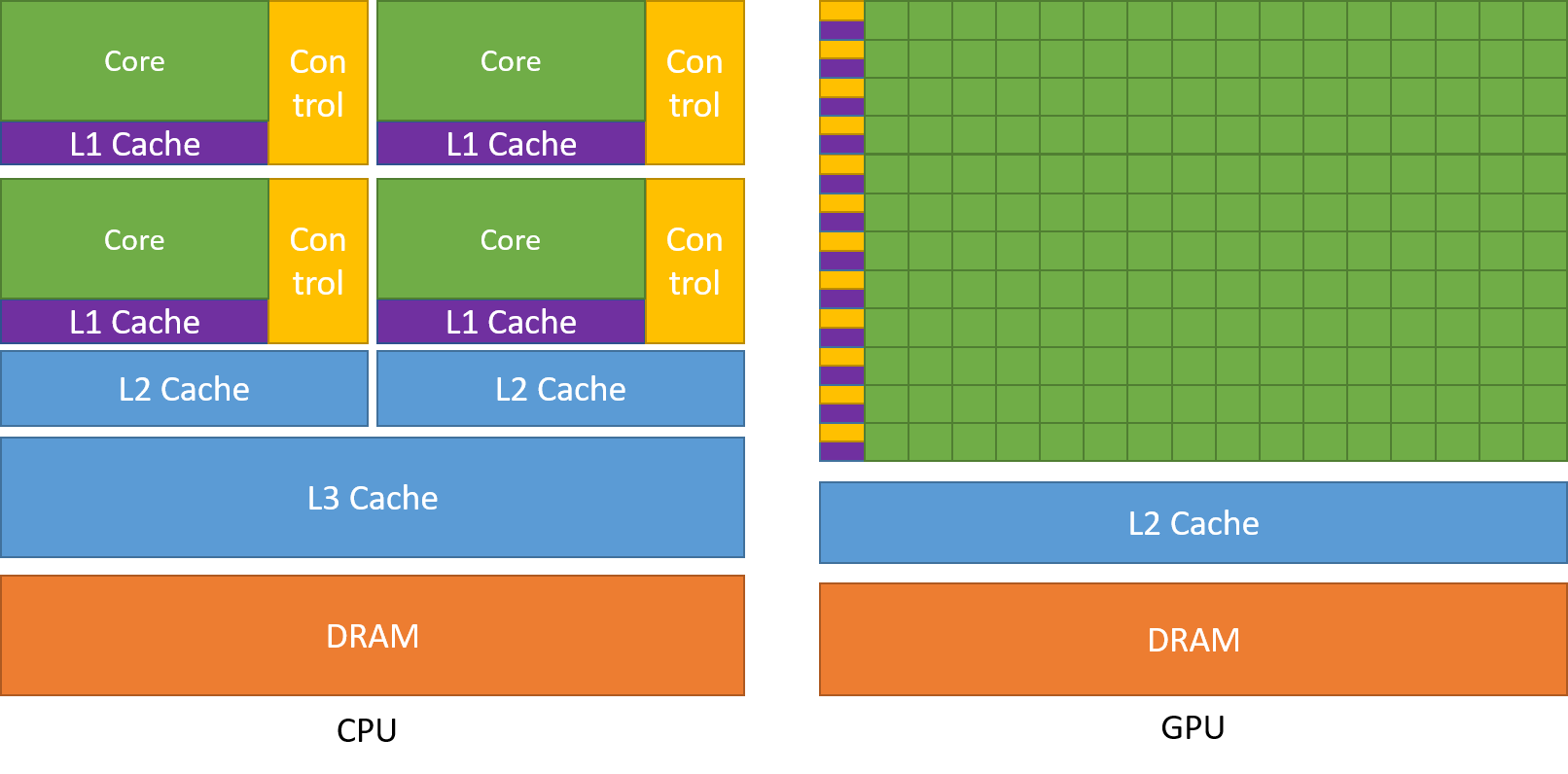

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

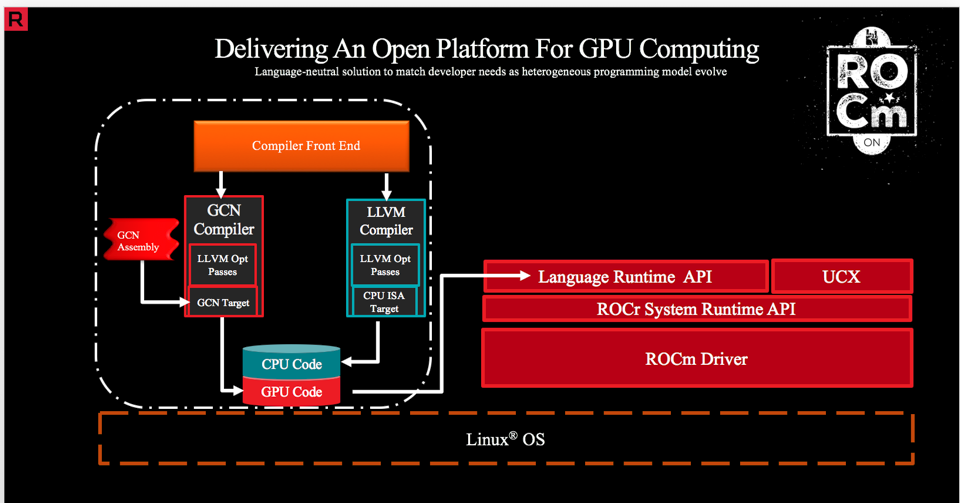

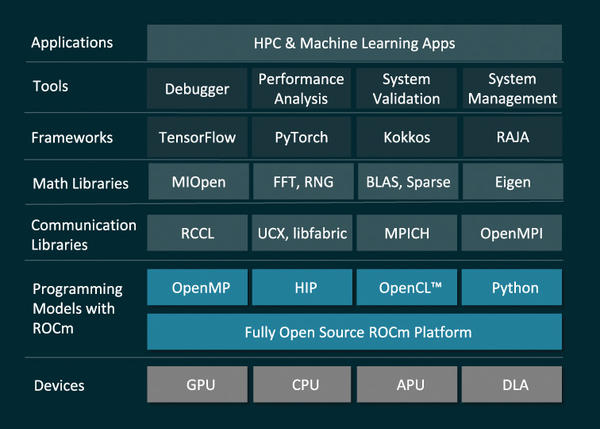

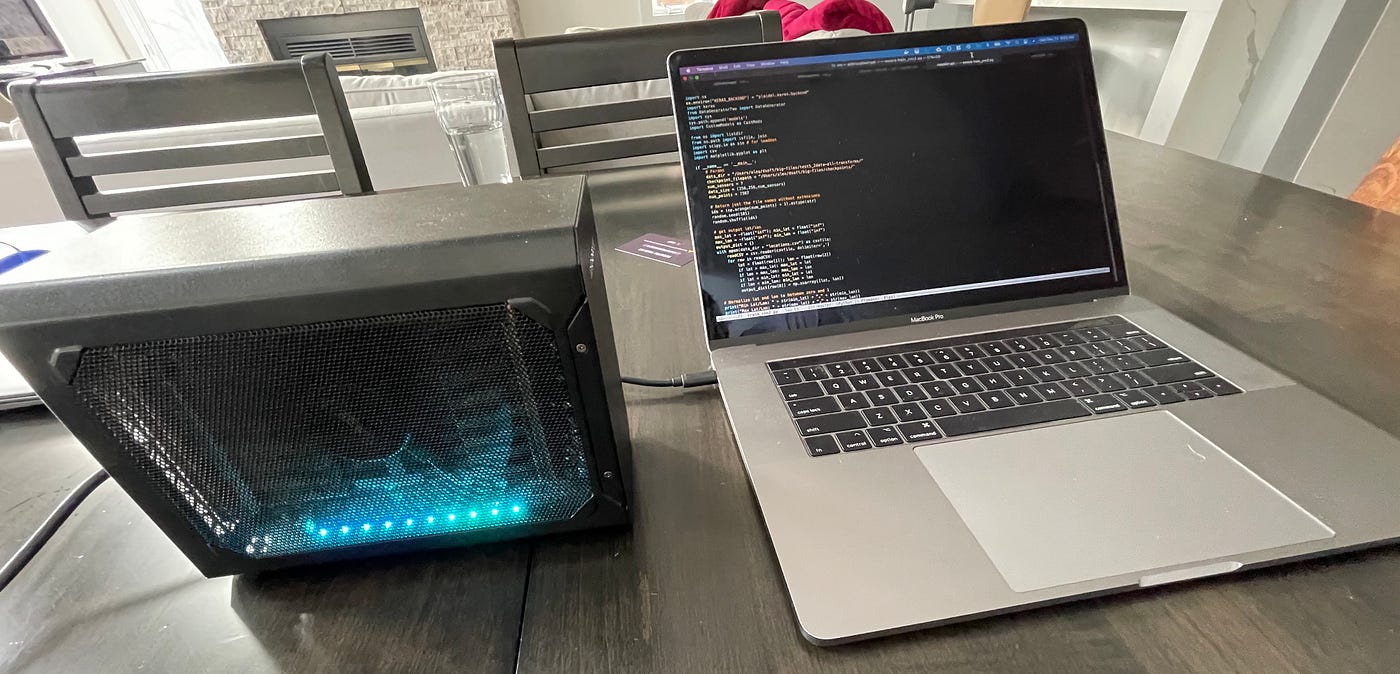

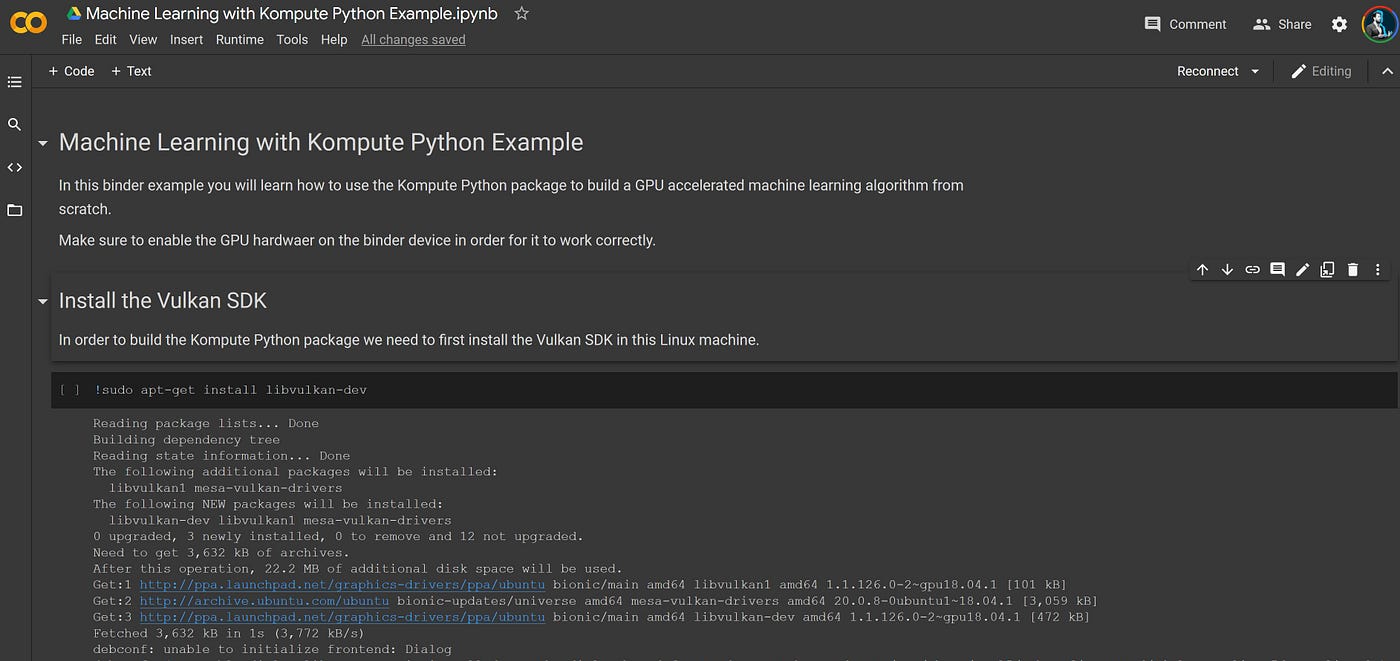

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

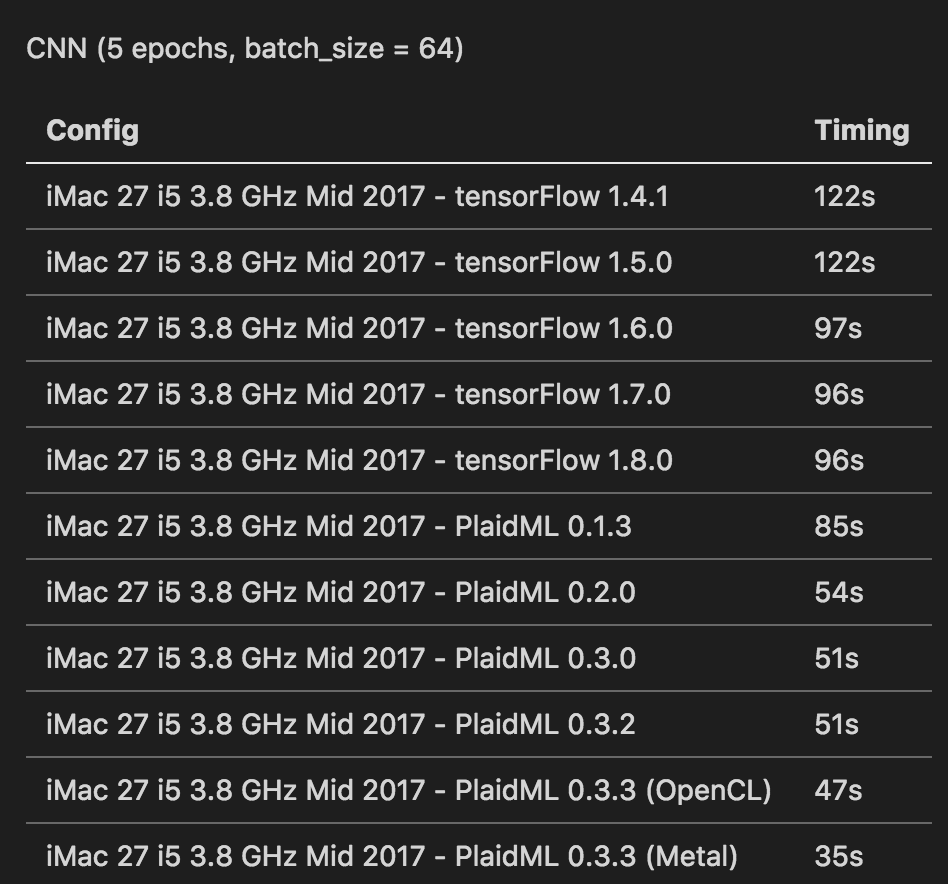

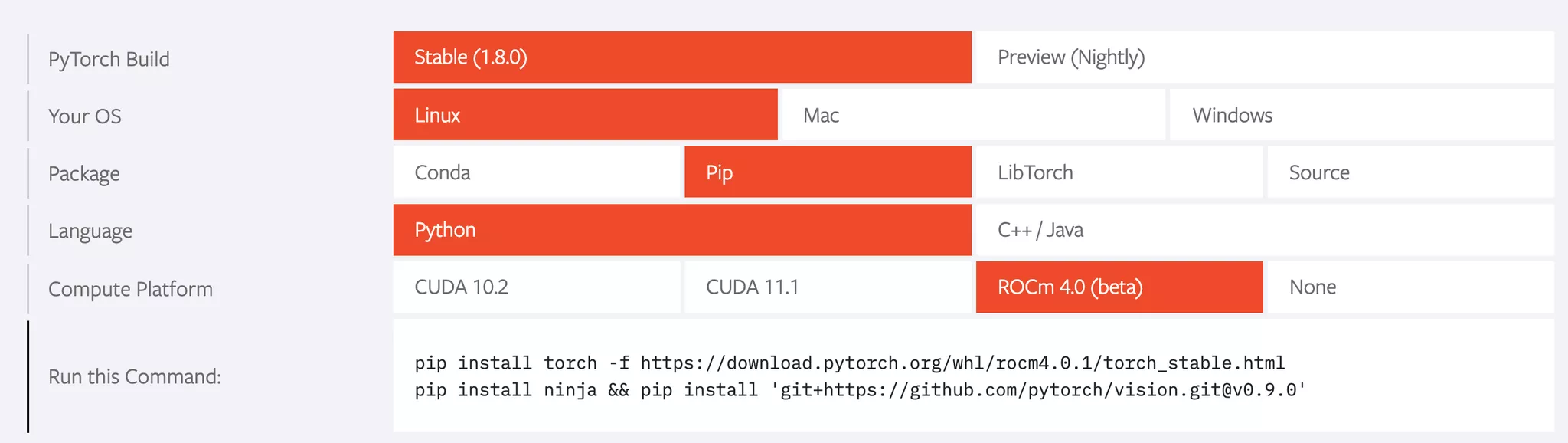

AMD or Intel, which processor is better for TensorFlow and other machine learning libraries? - Quora

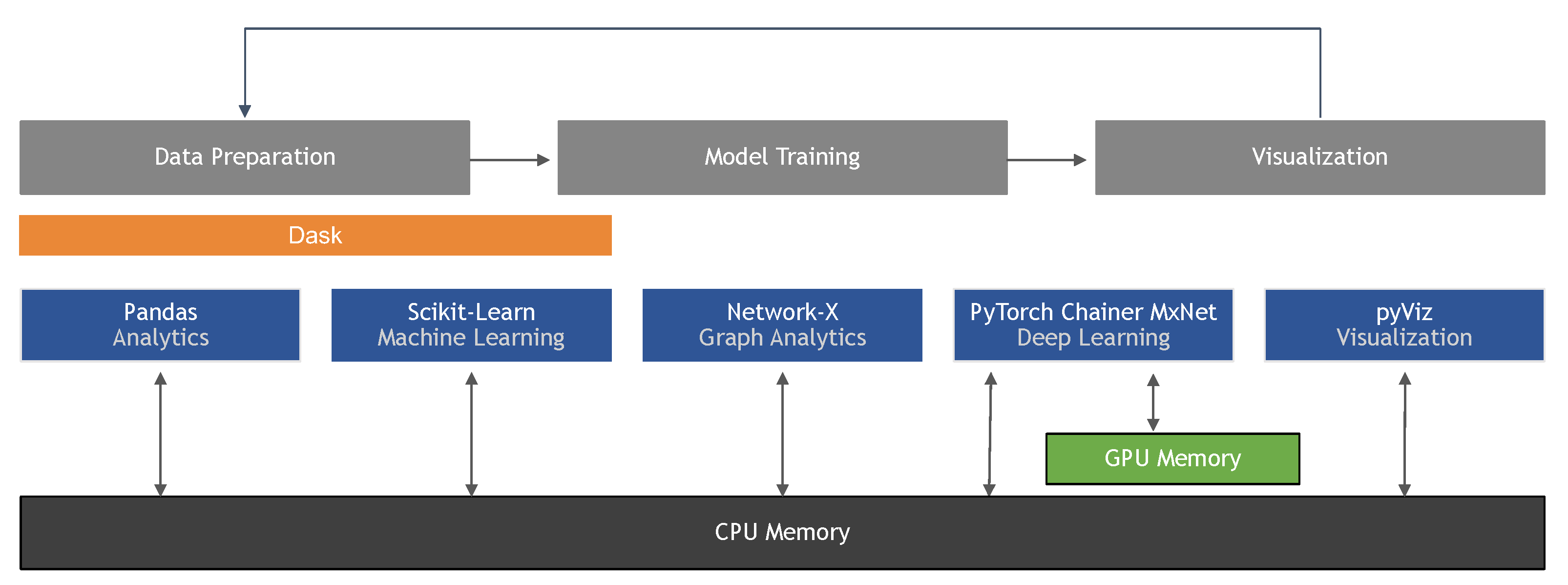

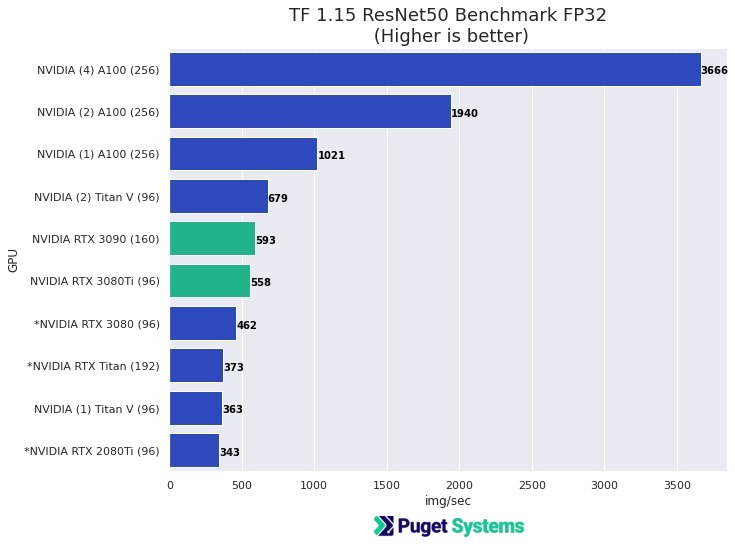

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science